Designing Multi-Agent Systems: Drawing Lessons from OpenAI’s o1 Reasoning Model

How breakthroughs in OpenAI's reasoning models can influence the development of dynamic, multi-agent systems that can learn and adapt together.

In the previous article, we explored the anatomy of autonomous AI agents—single entities designed to handle specific tasks autonomously. While these agents can revolutionize individual workflows, the true potential of agentic AI lies in creating systems where multiple autonomous AI agents collaborate, learning and adapting together. This article explores how to design such multi-agent systems and the challenges they address.

Businesses today face mounting complexity in their operations, from supply chain disruptions to rapidly evolving customer expectations. Traditional automation systems, such as Software-as-a-Service (SaaS), Robotic Process Automation (RPA), and Low-Code/No-Code (LCNC) platforms, have promised transformation but often fall short of delivering true efficiency. These tools frequently require heavy manual intervention, struggle with adaptability, and create inefficiencies rather than solving them. Multi-agent systems offer a way forward: a network of specialized agents that interact, refine their outputs, and create a continuously improving system.

OpenAI’s recent announcement of its o3 reasoning model marked a breakthrough: the model achieved an unprecedented score on the ARC-AGI benchmark. Many view this as a meaningful step toward the broader vision of Artificial General Intelligence (AGI), where systems exhibit the ability to generalize and adapt across diverse challenges.

Insights from the latest research1 into the o1 model’s inner workings—such as its modular reasoning, dynamic context adaptation, and efficient knowledge integration—offer valuable lessons for creating networks of agents that interact dynamically and solve complex problems. By applying these principles, we can design more robust and adaptive multi-agent systems capable of tackling real-world challenges.

Designing Agentic Systems for the Next Levels of AGI

To understand the trajectory of advanced AI systems, we can use the framework that OpenAI uses internally to guide its path toward AGI. Each level builds upon the last, moving from basic interaction to systems capable of managing complex operations autonomously:

Level 1 (Chatbots): Conversational AI is like the entry-level customer service representative who can handle FAQs, route inquiries, and manage basic tasks. These systems excel in providing quick, straightforward answers but lack the capacity for deeper problem-solving or strategic insights.

Level 2 (Reasoners) ← we’re here: Reasoners function like skilled analysts in your organization. With OpenAI's recent introduction of o3, AI reasoning is reaching a level of intelligence where systems can tackle novel challenges effectively, paving the way for more autonomous decision-making and complex problem-solving.

Level 3 (Agents): Agents are comparable to a highly competent project manager. For example, an AI-driven supply chain agent could reorder inventory, adjust shipping schedules, and negotiate with vendors without human intervention.

Level 4 (Innovators): Innovators take on the role of a creative product designer or strategist. These systems generate novel ideas and develop groundbreaking solutions, such as conceptualizing entirely new business models or product lines based on emerging market trends.

Level 5 (Organizations): Organizational Equivalents represent an autonomous C-suite, capable of running the entire organization. These systems manage workflows, make strategic decisions, and adapt to market shifts—essentially functioning as an independent enterprise.

Achieving Levels 3 through 5 will require robust multi-agentic designs that inherit adaptability from reasoning models while introducing capabilities for creativity and strategic management.

The Four Key Principles for Multi-Agentic Systems

To bridge the gap between reasoning capabilities and fully autonomous multi-agentic systems, four foundational principles lay the groundwork for collaboration, learning, and adaptability among agents:

Policy Initialization: Policy initialization ensures agents start with a clear understanding of their role, equipped with the tools and training to succeed. Just as an HR department provides new hires with company guidelines and access to necessary software, this principle sets up agents with domain-specific expertise, ethical guidelines, and seamless integrations to begin their tasks effectively.

Reward Design: Reward design aligns agents’ actions with the organization’s objectives. Agents are encouraged to prioritize collaborative outcomes and iteratively improve through feedback, much like how a sales team adjusts its strategies based on performance metrics.

Search Mechanisms: Search mechanisms empower agents to explore and refine strategies dynamically, ensuring that innovative and practical solutions emerge even under uncertainty. This is akin to iterating on a product concept until it fits market needs.

Learning Frameworks: Learning frameworks enable agents to continuously improve individually and collectively. These frameworks ensure that agents learn from their experiences and adapt to evolving challenges, fostering a culture of continuous improvement.

Let’s now explore these four key principles in greater detail.

1. Policy Initialization: Building Strong Foundations

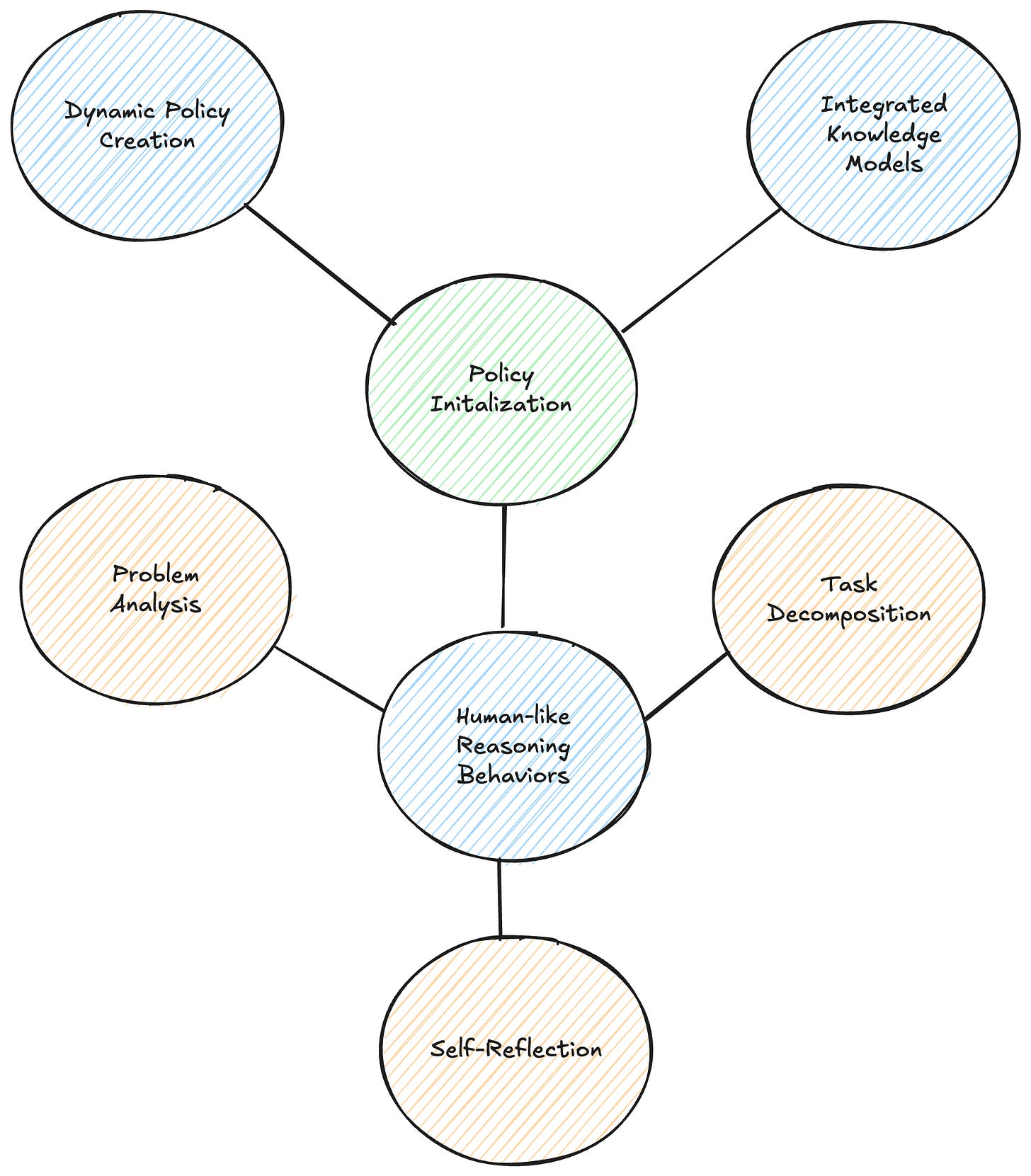

Policy initialization is the process of equipping agents with the foundational tools and frameworks necessary to operate effectively in complex environments. Drawing from advanced reasoning models like o1, this principle focuses on three core components:

Dynamic Policy Creation: Agents are initialized with policies that adapt through iterative evaluation and reasoning. Techniques like Monte Carlo Tree Search enable agents to refine strategies by balancing exploration and exploitation, which is critical for decision-making in novel scenarios.2 This process is further enhanced by token-level action granularity, where agents operate at the finest level of decision-making, selecting individual tokens from a vast vocabulary to construct coherent responses.3 Much like a startup founder refining their business plan based on market feedback, agents dynamically adjust their policies to optimize performance. Additionally, step-by-step reasoning and alternative proposal mechanisms allow agents to explore multiple solutions and self-correct when errors are detected, significantly improving accuracy and adaptability.4

Integrated Knowledge Models: Agents are initialized with modular knowledge bases that combine pre-trained datasets and domain-specific updates. This approach integrates cross-domain knowledge with real-time data for situational accuracy, enabling agents to remain highly relevant and effective in their operational domains.5 Consider a consultant who tailors their advice to each client’s unique needs. Pre-training establishes language understanding, world knowledge acquisition, and basic reasoning abilities, while instruction fine-tuning transforms these capabilities into task-oriented behaviors.6 For example, multilingual training data enables cross-lingual transfer, and exposure to diverse corpora fosters domain expertise in areas like mathematics, science, and programming.7

Human-like Reasoning Behaviors: Agents are equipped with sophisticated reasoning behaviors that mimic human problem-solving. These include:

Problem Analysis: Reformulating and analyzing problems to reduce ambiguity and construct actionable specifications.8 It’s similar to a CEO breaking down a complex challenge into clear, actionable goals.

Task Decomposition: Breaking complex problems into manageable subtasks, dynamically adjusting granularity based on context.9 This mirrors a project manager dividing a large project into smaller milestones.

Self-Reflection: Evaluating and correcting outputs, enabling agents to recognize flaws and refine their reasoning processes.10 It’s like a company conducting a post-mortem after a product launch to identify lessons learned.
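
To make the exploration-versus-exploitation balance behind Monte Carlo Tree Search (mentioned under Dynamic Policy Creation) more concrete, here is a minimal sketch of the UCB1 selection rule that many MCTS variants use when choosing which branch to expand. The action names and visit statistics are hypothetical and purely illustrative; this is not a description of how o1 itself selects actions.

```python
import math

def ucb1_select(stats, c=math.sqrt(2)):
    """Pick the action with the highest UCB1 score.

    stats maps action -> (visit_count, total_reward).
    The score is the mean reward (exploitation) plus a bonus that
    grows for rarely visited actions (exploration).
    """
    total_visits = sum(n for n, _ in stats.values())
    best_action, best_score = None, float("-inf")
    for action, (n, total) in stats.items():
        if n == 0:
            return action  # always try unvisited actions first
        mean = total / n
        bonus = c * math.sqrt(math.log(total_visits) / n)
        if mean + bonus > best_score:
            best_action, best_score = action, mean + bonus
    return best_action

# Hypothetical statistics for two candidate reasoning moves.
stats = {"decompose": (10, 6.0), "retry": (2, 1.0)}
print(ucb1_select(stats))         # "retry": rarely visited, large bonus
print(ucb1_select(stats, c=0.0))  # "decompose": pure exploitation
```

Setting `c` to zero removes the exploration bonus entirely, which shows how a single constant shifts an agent from trying new options to repeating what already worked.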

Policy Initialization Lessons from the o1 Reasoning Model

The o1 model highlights several key insights that can inform the policy initialization of agentic systems:

Long-Text Generation: Agents must generate lengthy, coherent outputs to handle complex reasoning tasks. Techniques like AgentWrite and Self-Lengthen enhance long-text generation capabilities by fine-tuning on specialized datasets.11

Logical Orchestration of Reasoning Behaviors: Agents need to strategically sequence reasoning behaviors, such as deciding when to self-correct or explore alternative solutions. Exposure to programming code and structured logical data strengthens these capabilities.12

Self-Reflection: Behaviors like self-evaluation and self-correction enable agents to recognize and address flaws in their reasoning. This self-reflection capability is critical for improving accuracy and reliability.13

Policy initialization provides agents with the foundational policies, knowledge bases, and reasoning frameworks they need to perform effectively. This ensures that every agent operates seamlessly within the larger system, maintaining both efficiency and adaptability.

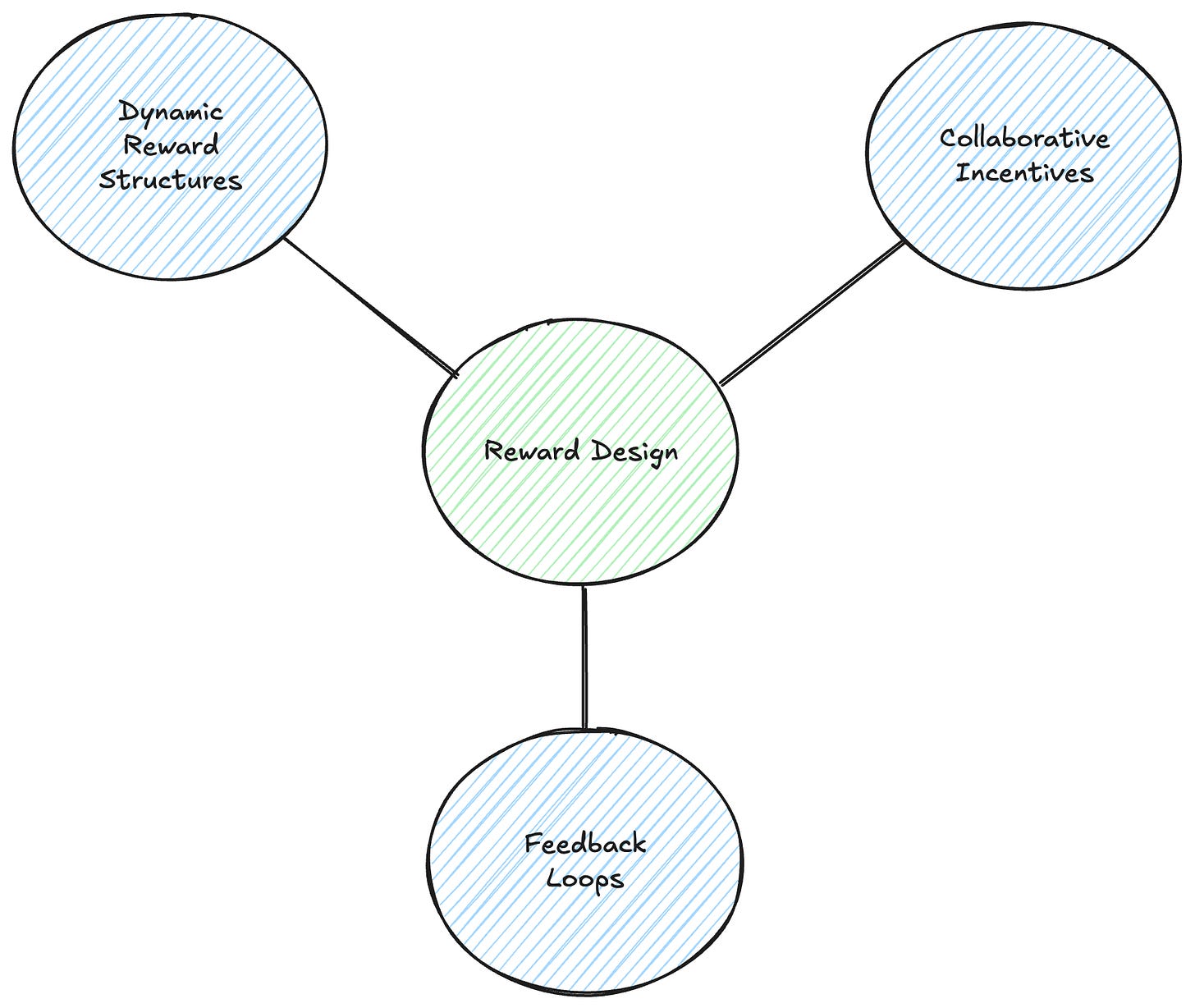

2. Reward Design: Aligning Actions with Goals

Reward design is the cornerstone of aligning agent behavior with system-wide objectives, ensuring that every action contributes to the overall goals of the organization. Drawing insights from reinforcement learning and advanced reasoning models, this principle involves three core concepts:

Dynamic Reward Structures: Incentive mechanisms must evolve alongside the system's changing goals and environments. Techniques like policy-gradient methods and adaptive reward shaping allow systems to fine-tune incentives dynamically, ensuring agents remain aligned with overarching objectives.14 For instance, a customer service agent might initially prioritize response speed but later focus on customer satisfaction as the system’s goals evolve.

Collaborative Incentives: To foster teamwork, agents must be rewarded not only for individual success but also for contributions to collective outcomes. Methods for multi-agent credit assignment, as described in coordination algorithms, ensure agents work toward shared objectives like improving overall customer retention or maximizing team productivity. This is akin to how high-performing organizations balance individual bonuses with team-based rewards to encourage collaboration.

Feedback Loops for Iterative Learning: By providing agents with continuous feedback on their performance, systems can refine agent behavior iteratively. Concepts like reward modeling, as highlighted in scalable alignment research, allow agents to adapt through both reinforcement signals and post-task evaluations.15 For example, a sales agent might adjust its approach to prospecting based on reward adjustments for achieving long-term revenue goals. This iterative process is similar to how businesses use performance reviews and real-time analytics to optimize employee output.
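
One way to picture collaborative incentives is a "difference reward": each agent is credited with how much the team result would drop if that agent's work were removed. The sketch below assumes a hypothetical support team where the shared objective is the number of distinct tickets resolved; the agent names, ticket IDs, and the 50/50 weighting are illustrative assumptions, not a prescribed scheme.

```python
def global_score(work):
    """Team objective: number of distinct tickets resolved by anyone."""
    resolved = set()
    for tickets in work.values():
        resolved |= tickets
    return len(resolved)

def difference_reward(work, agent):
    """Credit = team score with the agent minus team score without it."""
    without = {name: t for name, t in work.items() if name != agent}
    return global_score(work) - global_score(without)

def blended_reward(work, agent, team_weight=0.5):
    """Mix individual output with the agent's marginal team contribution."""
    individual = len(work[agent])
    marginal = difference_reward(work, agent)
    return (1 - team_weight) * individual + team_weight * marginal

# Hypothetical ticket IDs resolved by each agent; ticket 3 was handled three times.
work = {"agent_a": {1, 2, 3}, "agent_b": {3, 4}, "agent_c": {3}}
for name in work:
    print(name, difference_reward(work, name), blended_reward(work, name))
```

Here `agent_c` resolved a ticket but added nothing the team did not already have, so its marginal contribution is zero. Raising `team_weight` pushes agents away from duplicated effort and toward work that moves the shared objective.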

Reward Design Lessons from the o1 Reasoning Model

The o1 reasoning model might provide some valuable insights into designing reward systems for agentic systems, particularly for complex, multi-task environments. Here are key lessons and challenges:

Process Rewards for Complex Reasoning: For tasks like mathematics and code generation, where responses involve long chains of reasoning, o1 likely employs process rewards to supervise intermediate steps rather than relying solely on outcome rewards.16 This ensures that agents are rewarded for correct reasoning processes, even if the final outcome is incorrect. Techniques like reward shaping can transform sparse outcome rewards into denser process rewards, enabling more effective learning.17

Learning from Preference and Expert Data: When reward signals from the environment are unavailable, o1 might leverage preference data (e.g., ranked responses) or expert data to infer rewards.18 This approach, inspired by methods like Inverse Reinforcement Learning (IRL), allows agents to learn from human-like decision-making patterns, even in the absence of explicit feedback.19

Robust and Adaptable Reward Models: o1’s reward model is likely trained on a large and diverse dataset, enabling it to generalize across domains. It can be fine-tuned using few-shot examples, making it adaptable to new tasks with minimal data. This flexibility is crucial for agentic systems operating in dynamic environments.
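
The idea of turning a sparse outcome reward into denser process rewards can be illustrated with potential-based reward shaping, the technique from the Ng, Harada, and Russell paper cited in the notes. In the sketch below, the potential values stand in for a hypothetical estimate of how close each intermediate reasoning state is to a correct answer; how o1 actually computes process rewards is not public, so treat this as an illustration only.

```python
def shaped_rewards(outcome_rewards, potentials, gamma=1.0):
    """Add the shaping term gamma * phi(s') - phi(s) to each step reward.

    outcome_rewards[t] is the original reward for step t.
    potentials[t] is phi(s_t), so it needs one more entry than the rewards.
    """
    if len(potentials) != len(outcome_rewards) + 1:
        raise ValueError("potentials must have len(outcome_rewards) + 1 entries")
    return [
        r + gamma * potentials[t + 1] - potentials[t]
        for t, r in enumerate(outcome_rewards)
    ]

# A four-step reasoning chain that is only rewarded at the very end.
outcome = [0.0, 0.0, 0.0, 1.0]
# Hypothetical progress estimates per state; the terminal potential is 0.
phi = [0.0, 0.25, 0.5, 0.75, 0.0]

dense = shaped_rewards(outcome, phi)
print(dense)       # [0.25, 0.25, 0.25, 0.25]
print(sum(dense))  # 1.0, the same total as the original outcome reward
```

Because the shaping terms telescope, the total reward for the chain is unchanged when the start and terminal potentials are both zero. Each step now carries its own learning signal, while the overall objective stays the same.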

While traditional businesses use a mix of monetary incentives, career advancement, and recognition to drive performance, reward design creates a sophisticated framework of reinforcement signals that shape agent behavior. This multi-layered approach mirrors how the most effective organizations balance individual achievement with team success, creating a self-reinforcing cycle of continuous improvement and collective achievement.

3. Search Mechanisms: Exploring and Refining Strategies

Search mechanisms empower agents to navigate uncertainty, test strategies, and refine their approaches dynamically. Based on insights from the roadmap paper by Zeng et al., these mechanisms can be broken into five core concepts:

Guiding Signals: Internal vs. External Guidance

Search relies on guiding signals to direct the exploration process. Internal guidance leverages the model’s own capabilities, such as model uncertainty or self-evaluation, to assess the quality of solutions. For example, self-consistency techniques use majority voting to select the most reliable answer from multiple candidates. External guidance, on the other hand, uses task-specific feedback, such as rewards or heuristic rules, to steer the search. This is akin to a business using customer feedback (external) versus internal performance metrics (internal) to refine its strategies.20

Search Strategies: Tree Search vs. Sequential Revisions

Search strategies fall into two broad categories: tree search and sequential revisions. Tree search, like Monte Carlo Tree Search (MCTS) or Best-of-N (BoN), generates multiple candidate solutions simultaneously, exploring a wide range of possibilities. Sequential revisions, in contrast, iteratively refine a single solution based on feedback or self-reflection. Think of tree search as a brainstorming session where multiple ideas are generated at once, while sequential revisions are like polishing a single idea through multiple drafts.21

Exploration vs. Exploitation

Effective search strategies balance exploration (trying new possibilities) with exploitation (leveraging known successful strategies). Techniques like epsilon-greedy algorithms ensure agents remain innovative while capitalizing on proven outcomes. For instance, during training, agents might explore diverse solutions (exploration) while also leveraging high-reward solutions (exploitation) to iteratively improve their policy. This is similar to a company investing in R&D (exploration) while maximizing profits from existing products (exploitation).22

Training-Time vs. Inference-Time Search

Search plays distinct roles in training and inference. During training, search helps generate high-quality data by exploring diverse solutions, often guided by external feedback like task-specific rewards. In inference, search refines outputs through internal guidance, such as self-evaluation, to improve accuracy. For example, during training, a model might use MCTS to explore a wide range of solutions, while during inference, it might use sequential revisions to refine its final answer.23

Efficiency and Scaling Challenges

Scaling search mechanisms introduces challenges, such as inverse scaling (where increased search degrades performance) and over-thinking on simple tasks. For example, using a reward model trained on limited data can lead to poor generalization during large-scale search. To address this, researchers propose techniques like speculative rejection (discarding low-quality solutions early) or combining tree search with sequential revisions to balance efficiency and performance. This is akin to a business optimizing its processes to avoid wasted effort while maintaining high-quality outcomes.24
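
Of the guiding signals described above, self-consistency is the easiest to illustrate. The sketch below takes a majority vote over sampled answers and, as one simple guard against over-thinking easy tasks, stops sampling once agreement is high enough. The `sample_answer` callable is a hypothetical stand-in for a model call, and the thresholds are arbitrary assumptions.

```python
from collections import Counter
from itertools import cycle

def majority_vote(answers):
    """Return the most frequent answer and its share of the votes."""
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / len(answers)

def vote_with_early_stop(sample_answer, max_samples=16, min_samples=3, threshold=0.8):
    """Sample answers until the leading answer is confident enough."""
    answers = []
    for _ in range(max_samples):
        answers.append(sample_answer())
        if len(answers) >= min_samples and majority_vote(answers)[1] >= threshold:
            break  # easy question: stop spending compute
    answer, share = majority_vote(answers)
    return answer, share, len(answers)

print(majority_vote(["42", "41", "42", "42", "7"]))  # ('42', 0.6)

# An "easy" question where every sample agrees stops after min_samples.
print(vote_with_early_stop(lambda: "4"))  # ('4', 1.0, 3)

# A "hard" question where samples keep disagreeing uses the full budget.
disagreeing = cycle(["a", "b", "c"])
print(vote_with_early_stop(lambda: next(disagreeing), max_samples=6)[2])  # 6
```

The early-stop rule is only a heuristic: agreement among samples signals consistency, not correctness, so a system would still want an external check for high-stakes decisions.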

Search Mechanism Lessons from the o1 Reasoning Model

The o1 reasoning model may offer some insights into optimizing search mechanisms, providing a blueprint for designing efficient and effective systems. Here are two critical takeaways:

Training-Time Search: Parallel Exploration with External Guidance

During training, o1 likely employs tree search techniques like Best-of-N (BoN) and Monte Carlo Tree Search (MCTS) to explore multiple solutions simultaneously. This parallel exploration is guided by external feedback, such as task-specific rewards or environmental signals like code execution results, ensuring alignment with real-world performance. Systems can adopt similar strategies to efficiently explore and validate solutions during training, leveraging external feedback to refine their approaches.25

Inference-Time Search: Iterative Refinement with Internal Guidance

During inference, o1 might shift to sequential revisions, iteratively refining outputs using internal guidance like self-evaluation or model uncertainty. This minimizes computational overhead while improving solution quality. By adopting iterative refinement, systems can optimize decisions in real-time without relying on costly external feedback, ensuring efficiency and accuracy.26
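
The contrast between the two takeaways can be sketched in a few lines. Best-of-N samples several candidates and keeps the best one under an external reward, while sequential revision improves a single draft for as long as an internal score keeps rising. The `generate`, `reward`, `revise`, and `self_score` callables are hypothetical placeholders for model calls and scoring functions; the toy numbers exist only to make the sketch runnable and say nothing about o1's actual procedure.

```python
def best_of_n(generate, reward, n):
    """Parallel exploration: sample n candidates, keep the best under an external reward."""
    candidates = [generate() for _ in range(n)]
    return max(candidates, key=reward)

def sequential_revision(draft, revise, self_score, max_rounds=5):
    """Iterative refinement: keep revising while the internal score improves."""
    best, best_score = draft, self_score(draft)
    for _ in range(max_rounds):
        candidate = revise(best)
        score = self_score(candidate)
        if score <= best_score:
            break  # no improvement, so stop early
        best, best_score = candidate, score
    return best

# Toy setup: a "solution" is an integer and the ideal answer is 10.
def closeness(x):
    return -abs(x - 10)

samples = iter([3, 12, 9, 17])
print(best_of_n(lambda: next(samples), closeness, n=4))    # 9
print(sequential_revision(7, lambda x: x + 1, closeness))  # 10
```

In this toy case the same function serves as both external reward and internal score. In a real system they would differ: the external signal might be a test suite or a business metric, while the internal one would be the model's own evaluation of its draft.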

Just as military planners use simulations to evaluate tactical approaches or chess grandmasters analyze multiple move sequences, search mechanisms enable agents to explore decision trees and optimize their choices through systematic evaluation. Together, these mechanisms ensure that agents navigate complex decision spaces with confidence and precision, much like a well-oiled corporate strategy team.
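
The epsilon-greedy technique mentioned earlier in this section can be sketched with a small multi-armed bandit: most of the time the agent picks the strategy with the best estimated payoff, and with probability epsilon it tries a random one. The payoff probabilities and parameters below are made-up values for illustration.

```python
import random

def epsilon_greedy(estimates, epsilon, rng):
    """With probability epsilon explore a random arm, otherwise exploit the best one."""
    if rng.random() < epsilon:
        return rng.randrange(len(estimates))
    return max(range(len(estimates)), key=lambda i: estimates[i])

def run_bandit(true_means, steps=2000, epsilon=0.1, seed=0):
    """Simulate repeated choices among strategies with unknown success rates."""
    rng = random.Random(seed)
    counts = [0] * len(true_means)
    estimates = [0.0] * len(true_means)
    for _ in range(steps):
        arm = epsilon_greedy(estimates, epsilon, rng)
        reward = 1.0 if rng.random() < true_means[arm] else 0.0
        counts[arm] += 1
        # Incremental mean update of the estimated payoff for this arm.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return counts, estimates

# Hypothetical success rates for three strategies.
counts, estimates = run_bandit([0.2, 0.5, 0.8])
print(counts)
print([round(e, 2) for e in estimates])
```

With `epsilon=0` the agent never explores and can lock onto whichever strategy happened to pay off first; with `epsilon=1` it never uses what it has learned. Values in between trade off the two, much like the R&D-versus-existing-products split described above.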

4. Learning Frameworks: Continuous Improvement and Collaboration

Learning frameworks in multi-agent systems are not just about continuous improvement; they encompass a broader set of principles that enable agents to adapt, innovate, and scale effectively. Here are the core concepts, enriched with insights from the research:

Reflective Analysis: Agents evaluate their own performance to identify strengths and weaknesses, refining their strategies iteratively. This is akin to a business conducting a post-mortem analysis after a project to understand what worked and what didn’t. For example, an agent might analyze its past decisions to improve future actions, much like a sales team refining its pitch based on customer feedback.27

Collaborative Knowledge Sharing: Agents share their experiences and solutions, creating a collective intelligence that benefits the entire system. This mirrors how cross-functional teams in a company share insights to solve complex problems. When one agent discovers an efficient solution, it’s shared across the network, enhancing overall performance—similar to how a company’s R&D department shares innovations with other teams to drive organizational growth.

Adaptive Learning Algorithms: Agents continuously update their strategies based on real-time data, ensuring they remain effective in dynamic environments. This is akin to a business adapting its operations in response to market trends. For instance, an agent might adjust its decision-making process based on new information, much like a retail company dynamically changing its inventory based on consumer demand.28

Search-Driven Exploration: Agents use search algorithms (e.g., beam search, Monte Carlo Tree Search) to explore high-value actions rather than relying on random sampling. This ensures that the training data is of higher quality, similar to how a company uses data analytics to identify the most promising opportunities. The iterative process of search and learning—where the system refines its strategies based on search results—mirrors how businesses refine their operations based on performance metrics.29

Hybrid Learning Approaches: Systems often combine multiple learning methods, such as behavior cloning and policy optimization, to achieve the best results. Behavior cloning focuses on replicating successful actions, while policy optimization learns from both successes and failures. This hybrid approach is like a business starting with proven strategies before experimenting with innovative approaches to achieve breakthroughs.30

Scaling and Efficiency: As systems grow in complexity, ensuring efficient learning becomes a critical challenge. The process of generating and analyzing data can be resource-intensive, much like a company’s R&D efforts consuming significant time and budget. To address this, systems can reuse data from previous iterations or focus on the most promising areas of exploration, similar to how businesses optimize their operations to reduce costs and improve efficiency.31

Dynamic Problem Generation: As agents improve, previously challenging problems become trivial, requiring the generation of more complex challenges. This is akin to a company continuously innovating to stay ahead of competitors. Systems must dynamically adjust their focus to ensure they’re always tackling meaningful problems, much like a business pivoting its strategy to address emerging market trends.32
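
Dynamic problem generation can be approximated with a simple curriculum rule: track the agent's recent success rate and move the difficulty up or down to keep it inside a target band. The band limits, step size, and difficulty scale in this sketch are illustrative assumptions rather than values from the research.

```python
def next_difficulty(difficulty, recent_results, low=0.4, high=0.8,
                    step=1, min_level=1, max_level=10):
    """Adjust difficulty so the recent success rate stays between low and high.

    recent_results is a list of booleans (True = task solved).
    """
    if not recent_results:
        return difficulty
    success_rate = sum(recent_results) / len(recent_results)
    if success_rate > high:
        return min(max_level, difficulty + step)  # too easy: make it harder
    if success_rate < low:
        return max(min_level, difficulty - step)  # too hard: back off
    return difficulty

print(next_difficulty(3, [True] * 9 + [False]))  # 4
print(next_difficulty(3, [True] + [False] * 4))  # 2
print(next_difficulty(3, [True, True, False]))   # 3
```

Keeping the success rate in a middle band means the agent is neither coasting on trivial problems nor failing so often that it receives no useful signal.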

Learning Framework Lessons from the o1 Reasoning Model

The o1 reasoning model may offer some insights that can inform the design of learning frameworks for agentic systems, particularly in policy initialization and training efficiency:

Warm-Starting with Behavior Cloning:

The o1 model suggests that behavior cloning is an efficient way to initialize policies, as it leverages high-reward solutions to quickly establish a strong baseline. This is akin to onboarding new employees with proven best practices to ensure they start on the right track. However, behavior cloning has limitations: it ignores lower-reward solutions, which can provide valuable learning signals. Thus, while it’s effective for warm-starting, it should be complemented with other methods for further optimization.33

Transitioning to Policy Optimization:

Once the initial policy is established, the o1 model suggests transitioning to policy optimization methods like PPO or DPO. These methods utilize all state-action pairs, including lower-reward solutions, to refine the policy. This is similar to a company moving from standardized training programs to more dynamic, data-driven strategies that incorporate lessons from both successes and failures. PPO, while memory-intensive, offers robust performance, whereas DPO is simpler and more memory-efficient but relies on preference data.34

Handling Distribution Shifts:

A potential challenge in o1 is the distribution shift that occurs when search-generated data (from a better policy) is used to train the current policy. This issue can also arise during policy initialization if the initial data doesn’t align with the system’s operational environment. To mitigate this, initialization can incorporate behavior cloning to narrow the gap between the initial and target policies, similar to aligning new hires’ skills with organizational needs through targeted training.35

Improving Training Efficiency:

The o1 model highlights the possible computational cost of train-time search, which can slow down policy initialization. To address this, systems can reuse data from previous iterations or focus on high-value actions, much like a company optimizing its workflows to reduce costs and improve efficiency. This ensures that initialization is both effective and resource-efficient.36
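
The difference between behavior cloning and preference-based optimization shows up in how each uses search-generated solutions. The sketch below builds a cloning set from only the top-scoring solution, builds preference pairs from all solutions, and computes the standard DPO loss for a single pair. The solution names, rewards, and log-probabilities are invented for illustration, and nothing here is claimed to mirror o1's actual training pipeline.

```python
import math
from itertools import combinations

def behavior_cloning_set(samples, top_k=1):
    """Keep only the top_k highest-reward solutions; the rest are discarded."""
    ranked = sorted(samples, key=lambda s: s[1], reverse=True)
    return [text for text, _ in ranked[:top_k]]

def preference_pairs(samples, margin=0.0):
    """Build (chosen, rejected) pairs, so lower-reward solutions still teach something."""
    pairs = []
    for (a, ra), (b, rb) in combinations(samples, 2):
        if abs(ra - rb) > margin:
            pairs.append((a, b) if ra > rb else (b, a))
    return pairs

def dpo_loss(logp_chosen, logp_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one pair: -log sigmoid(beta * (policy log-ratio gap vs. reference))."""
    gap = (logp_chosen - ref_chosen) - (logp_rejected - ref_rejected)
    return math.log1p(math.exp(-beta * gap))

# Hypothetical search outputs for one prompt: (solution, reward).
samples = [("sol_a", 1.0), ("sol_b", 0.2), ("sol_c", 0.0)]
print(behavior_cloning_set(samples))  # ['sol_a']
print(preference_pairs(samples))      # three pairs, all solutions used

# If the policy equals the reference, the loss is log(2); it falls as the
# policy favors the chosen answer more than the reference does.
print(dpo_loss(-1.0, -2.0, -1.0, -2.0))
print(dpo_loss(-1.0, -3.0, -2.0, -2.0))
```

The cloning set throws away two of the three solutions, while the preference pairs use all of them. That is the practical meaning of the point above that policy optimization can learn from lower-reward solutions too.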

In essence, just as a successful corporation thrives on continuous improvement, collaboration, and adaptability, multi-agent systems achieve their goals through reflective analysis, knowledge sharing, adaptive learning, and strategic exploration.

Challenges Ahead

As we move toward implementing sophisticated multi-agent systems, several significant challenges emerge that organizations must navigate carefully. These hurdles reflect the complexity of creating systems that can think, learn, and collaborate effectively at scale.

Lack of Published Research on o1

A major challenge in reproducing or understanding the o1 model is the absence of published research from OpenAI. OpenAI has not released any detailed papers or technical reports about the o1 model, forcing researchers and developers to reverse engineer its capabilities based on limited information and public demonstrations. This lack of transparency makes it difficult to fully understand the underlying mechanisms, architectures, and training methodologies used in o1. As a result, the research community must rely on open-source projects and independent studies to piece together the roadmap to achieving similar capabilities. This underscores the importance of open-source models and the need for more transparency in AI research.

Technical Scalability and Performance: Just as businesses face challenges when scaling their operations, multi-agent systems encounter unique obstacles as they grow. Counter-intuitively, adding more computational power doesn't always improve performance—in fact, it can sometimes degrade it. This is similar to how adding more team members to a project doesn't necessarily increase productivity. The way these systems process information, particularly when making complex decisions, creates bottlenecks that become more pronounced at scale. Consider how a video conference becomes unstable with too many participants—multi-agent systems face similar coordination challenges but at a much more sophisticated level.

Balancing Learning and Action: Much like developing effective performance metrics for employees, creating the right incentive structures for AI agents presents unique challenges. These systems need clear signals about what constitutes success, but defining these metrics becomes increasingly complex as tasks become more sophisticated. For instance, how do you balance a customer service agent's need to resolve issues quickly with the quality of their interactions? The system must learn from experience while maintaining consistent performance, similar to how businesses must innovate while maintaining day-to-day operations.

Orchestrating Collaboration: Perhaps the most significant challenge lies in managing how multiple agents work together effectively. This mirrors the complexity of coordinating different departments within a large organization—each unit needs to operate independently while contributing to overall goals. The system must manage resources efficiently, ensure smooth communication between agents, and maintain performance across the entire network. Just as a company needs robust systems to coordinate between sales, operations, and customer service, multi-agent systems require sophisticated frameworks to manage their interactions and collective learning.

Ironically, as we work to develop AI systems to solve these organizational challenges, we could find ourselves grappling with the same fundamental problems that have plagued organizations for decades, just in a different form. Whether managed by humans or artificial intelligence, it seems the core challenges of running complex, adaptive organizations will remain a difficult problem to solve.

Zeng, Zhiyuan, et al. "Scaling of Search and Learning: A Roadmap to Reproduce o1 from Reinforcement Learning Perspective." 2024.

Brown, Tom B., et al. "Language Models Are Few-Shot Learners." 2020; Kaplan, Jared, et al. "Scaling Laws for Neural Language Models." 2020.

Radford, Alec, and Karthik Narasimhan. "Improving Language Understanding by Generative Pre-Training." 2018; Brown, Tom B., et al. "Language Models Are Few-Shot Learners." 2020.

Wei, Jason, et al. "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models." 2022; Puerto, Haritz, et al. "Fine-Tuning with Divergent Chains of Thought Boosts Reasoning Through Self-Correction in Language Models." 2024.

Radford, Alec, et al. "Language Models Are Unsupervised Multitask Learners." 2019; Brown, Tom B., et al. "Language Models Are Few-Shot Learners." 2020.

Wei, Jason, et al. "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models." 2022; Chung, Hyung Won, et al. "Scaling Instruction-Finetuned Language Models." 2024.

Scao, Teven Le, et al. "BLOOM: A 176B-Parameter Open-Access Multilingual Language Model." 2022; Yao, Shunyu, et al. "Tree of Thoughts: Deliberate Problem Solving with Large Language Models." 2023.

Deng, Yang, et al. "Prompting and Evaluating Large Language Models for Proactive Dialogues: Clarification, Target-Guided, and Non-Collaboration." 2023; Lee, Dongryeol, et al. "Asking Clarification Questions to Handle Ambiguity in Open-Domain QA." 2023.

Bursztyn, Victor S., et al. "Learning to Perform Complex Tasks Through Compositional Fine-Tuning of Language Models." 2022; Zhou, Denny, et al. "Least-to-Most Prompting Enables Complex Reasoning in Large Language Models." 2023.

Madaan, Aman, et al. "Self-Refine: Iterative Refinement with Self-Feedback." 2023; Cheng, Qinyuan, et al. "Can AI Assistants Know What They Don’t Know?" 2024.

Bai, Yuntao, et al. "Constitutional AI: Harmlessness from AI Feedback." 2024.

Quan, Shanghaoran, et al. "DMOERM: Recipes of Mixture-of-Experts for Effective Reward Modeling." 2024.

Sun, Qiushi, et al. "A Survey of Neural Code Intelligence: Paradigms, Advances and Beyond." 2024; Aryabumi, Viraat, et al. "To Code, or Not to Code? Exploring Impact of Code in Pre-Training." 2024.

Madaan, Aman, et al. "Self-Refine: Iterative Refinement with Self-Feedback." 2023; Cheng, Qinyuan, et al. "Can AI Assistants Know What They Don’t Know?" 2024.

Ng, Andrew Y., Daishi Harada, and Stuart Russell. "Policy Invariance Under Reward Transformations: Theory and Application to Reward Shaping." 1999.

Christiano, Paul F., et al. "Deep Reinforcement Learning from Human Preferences." 2017.

Cobbe, Karl, et al. "Training Verifiers to Solve Math Word Problems." 2021.

Ng, Andrew Y., Daishi Harada, and Stuart Russell. "Policy Invariance Under Reward Transformations: Theory and Application to Reward Shaping." 1999.

Christiano, Paul F., et al. "Deep Reinforcement Learning from Human Preferences." 2017.

Garg, Divyansh, et al. "IQ-Learn: Inverse Soft-Q Learning for Imitation." 2021.

Wang, Xuezhi, et al. "Self-Consistency Improves Chain of Thought Reasoning in Language Models." 2023; Snell, Charlie, et al. "Scaling LLM Test-Time Compute Optimally Can Be More Effective Than Scaling Model Parameters." 2024.

Browne, Cameron, et al. "A Survey of Monte Carlo Tree Search Methods." 2012.

Cobbe, Karl, et al. "Training Verifiers to Solve Math Word Problems." 2021.

Sutton, Richard S., and Andrew G. Barto. "Reinforcement Learning: An Introduction." 1998; Anthony, Thomas, et al. "Thinking Fast and Slow with Deep Learning and Tree Search." 2017.

OpenAI. "Learning to Reason with LLMs." 2024; Chen, Mark, et al. "Evaluating Large Language Models Trained on Code." 2021.

Brown, Bradley C. A., et al. "Large Language Monkeys: Scaling Inference Compute with Repeated Sampling." 2024; Gao, Leo, et al. "Scaling Laws for Reward Model Overoptimization." 2023.

Browne, Cameron, et al. "A Survey of Monte Carlo Tree Search Methods." 2012.

Cobbe, Karl, et al. "Training Verifiers to Solve Math Word Problems." 2021.

Snell, Charlie, et al. "Scaling LLM Test-Time Compute Optimally Can Be More Effective Than Scaling Model Parameters." 2024; Wang, Xuezhi, et al. "Self-Consistency Improves Chain of Thought Reasoning in Language Models." 2023.

Silver, David, et al. "Mastering the Game of Go with Deep Neural Networks and Tree Search." 2016.

Sutton, Richard S., and Andrew G. Barto. "Reinforcement Learning: An Introduction." 1998.

Silver, David, et al. "Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm." 2017.

Schulman, John, et al. "Proximal Policy Optimization Algorithms." 2017.

Rafailov, Rafael, et al. "Direct Preference Optimization: Your Language Model is Secretly a Reward Model." 2023.

Kumar, M. Pawan, et al. "Curriculum Learning for Reinforcement Learning Domains." 2010.

Touvron, Hugo, et al. "LLaMA: Open and Efficient Foundation Language Models." 2023.

Dubey, Abhimanyu, et al. "The Llama 3 Herd of Models." 2024.

Xie, Yuxi, et al. "Monte Carlo Tree Search Boosts Reasoning via Iterative Preference Learning." 2024.

Xu, Can, et al. "WizardLM: Empowering Large Language Models to Follow Complex Instructions." 2023.